Could This Computer Be the End of the Data Center as We Know It?

The ominous lyrics of Pink Floyd's "Welcome to the Machine" seem to be playing in the background:

Welcome my son, welcome to the machine.

Where have you been? It's alright we know where you've been.

Could they have chosen a more 1984-esque name than "The Machine"?

With the latest news from HP, most people are talking about how this will revolutionize the "Internet of Things" (the latest buzzword for the hyper-connectedness of the 21st century).

But for those of us in the IT, HVAC, and electrical industries, the wheels are already turning in another direction.

"This computer uses 80 times LESS power?" How will this affect power distribution needs?

"The Machine is at least six times MORE powerful than current servers? It can process 160 petabytes in 250 nanoseconds?" How drastically will this shrink data center size?

How will current Ethernet and fiber networks handle the obscenely large amounts of data this device will demand?

Running in Circles

There's been a big game of cat and mouse going on in the data center industry for decades.

Users demand more data and more processing power; servers demand more power and cooling. Add more support equipment. Life is good.

For about 2 seconds.

Server manufacturers add more cores, IT techs add more RAM and bigger hard drives, and the cycle starts all over again.

So if you've even heard about the data center industry in the past 10 years (let alone worked in it), it's no surprise to you that data centers have been pushing their power capacity to the limit.

We were all stunned when data centers accounted for 2% of total U.S. electricity consumption several years ago, and that figure has only been climbing.

Everyone was trying to make the industry more efficient: better cooling, more efficient power systems, free cooling, energy recovery, virtualization. The list goes on and on.

But it didn't make a dent in power consumption. The demand was too high, and basic server technology hadn't changed in decades.

Sure, we now have better virtual machines, multi-core processors and more, but those are all iterations of the same concept (the Intel IA-32 architecture).

What the industry needed was a revolutionary new computer that was not simply X% more efficient, but XX times more efficient.

And it seems HP has delivered.

A True Game Changer

While The Machine isn't really a quantum computer (it is still based on traditional digital architecture), it would represent a quantum leap in computing technology.

An 80-fold reduction in power consumption would mean far more could be done with far less (less electricity and less waste heat).

A 6-fold increase in processing power would mean even "big data" would be chump change for this machine.
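For those of us on the electrical and HVAC side, the 80-fold claim translates directly into load calculations. The short Python sketch that follows is a back-of-the-envelope estimate, not an HP figure: it assumes a hypothetical 1 MW IT load and uses standard conversions (1 W of IT load is roughly 3.412 BTU/h of heat; 1 ton of refrigeration is 12,000 BTU/h). It ignores UPS losses, lighting, and other facility overhead, and the helper names `reduced_load` and `cooling_tons` are illustrative only.

```python
# Back-of-the-envelope sketch: what HP's claimed 80x drop in power would mean
# for electrical and cooling loads. The 1 MW starting load is hypothetical.

WATTS_TO_BTU_PER_HR = 3.412   # 1 W of IT load rejects ~3.412 BTU/h of heat
BTU_PER_HR_PER_TON = 12_000   # 1 ton of refrigeration = 12,000 BTU/h


def reduced_load(it_load_w: float, reduction_factor: float) -> float:
    """Return the IT load in watts after dividing by the claimed reduction factor."""
    return it_load_w / reduction_factor


def cooling_tons(it_load_w: float) -> float:
    """Convert an IT load in watts to tons of refrigeration needed to reject its heat."""
    return it_load_w * WATTS_TO_BTU_PER_HR / BTU_PER_HR_PER_TON


if __name__ == "__main__":
    today_w = 1_000_000                    # hypothetical 1 MW IT load
    machine_w = reduced_load(today_w, 80)  # assumes HP's 80x claim holds
    print(f"IT load: {today_w / 1000:.1f} kW -> {machine_w / 1000:.1f} kW")
    print(f"Cooling: {cooling_tons(today_w):.1f} tons -> {cooling_tons(machine_w):.1f} tons")
```

Run as-is, it reports the IT load dropping from 1000.0 kW to 12.5 kW and the cooling requirement from about 284.3 tons to about 3.6 tons. Actual facility savings would depend on the overheads the sketch leaves out.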

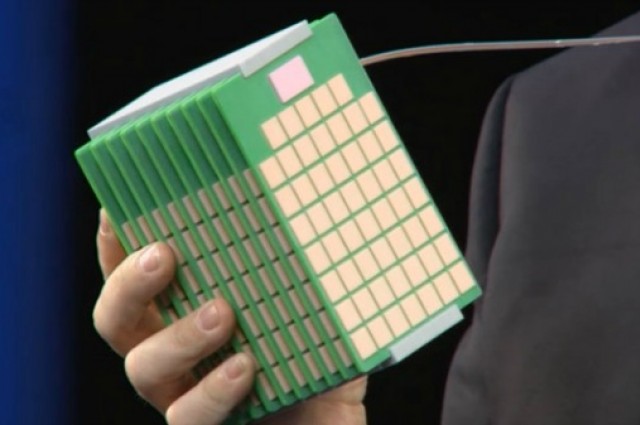

The numbers are hard to follow, at least for someone used to present-day hardware specifications. Quad-core computer? This thing has multi-core clusters. 16 GB of RAM? Even a miniature version of this thing would have 100 terabytes of memory.

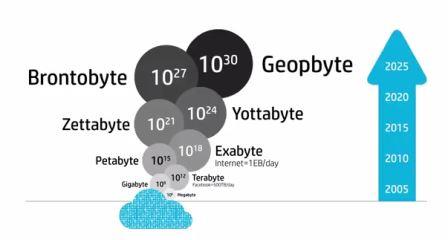

Just how powerful is that? Keep reading and check out the graphic below.

A computer like this could replace dozens (if not more) of traditional servers while still using less electricity. The possibilities are wide open.

Need more processing power? Start buying these. Want to save energy but don't need more capacity? Start buying these.

And you shouldn't have to wait too long: according to HP, better prototypes of the device should be out next year, with production models available by 2018.

If My Memory Serves Me

Take a look at the graphic below, and try to wrap your head around what HP sees as the future of computing and big data.

OK, seriously, Exabyte? Brontobyte? And they expect this within 10 years? Amazing.

And what's even cooler is that The Machine isn't just a multi-core processor, and it doesn't just have a huge amount of memory. It has both.

As long as HP can deliver an adequate bus structure, there shouldn't be any bottlenecks to this device's maximum speed.

Some Things Never Change

Some of the fastest computers of the 1960s and 1970s used integrated circuits (precursors to microprocessors) that generated incredible amounts of heat. In other words, they were very, very inefficient.

Many experts believed that once microprocessors hit the mainstream in the mid-1970s, the electrical and heat loads of a data center would actually decrease, because the newer devices were much more efficient.

Obviously, that didn't happen: IT loads have only increased dramatically in the decades since.

Perhaps that should be a lesson to us all. Despite how remarkably powerful and efficient a new computer may be, history tells us we don't like to rest on our laurels. We are always pushing the boundaries of data and processing speed, and energy efficiency usually takes a back seat.

Will we reach a point where we don't "need" any more data, and we can implement fewer, more efficient servers such as "The Machine" from HP? Or will this device, despite its breakneck performance, increase our thirst for big data?

How will The Machine affect you and your customers? Is this the dawn of a golden age in data centers?

Comments


Found you on Twitter. Interesting new computer. Must admit that it reminds me of something from Star Trek. Sort of an abstract device without a lot of detail. This must be a mock-up design (and not a very detailed one at that).